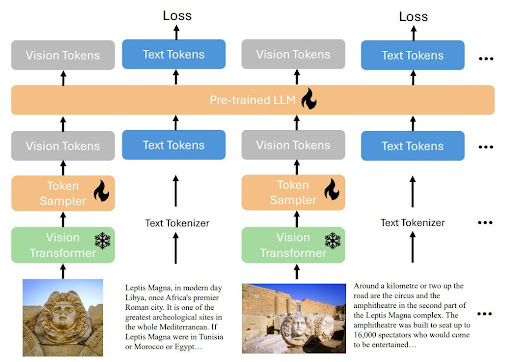

Salesforce AI Research has introduced xGen-MM (also known as BLIP-3), a suite of open-source large multimodal models. The release is a notable step forward for the field: xGen-MM models can comprehend and generate content that integrates text, images, and other data types.

Understanding the Power of xGen-MM

At its core, xGen-MM is a framework for handling multimodal data that combines visual and language information. This capability lets the models perform a wide range of tasks, such as answering questions about several images at once or generating descriptive captions for images.

Source: Salesforce AI Research

Key Features and Benefits of xGen-MM

1. Interleaved Data Handling:

The models excel at processing interleaved data, which naturally combines multiple images and text. This capability mirrors human perception and facilitates more nuanced understanding.

2. State-of-the-Art Performance:

The xGen-MM framework includes pre-trained models that have performed strongly on various benchmarks, surpassing many other open-source models of similar size.

3. Open-Source Accessibility:

Salesforce’s decision to make xGen-MM open-source is a game-changer. By sharing the MM models, datasets, and fine-tuning code, Salesforce is democratizing access to cutting-edge multimodal AI technology, fostering innovation and collaboration within the research community.

4. Versatility and Customization:

The framework offers a range of pre-trained models, each optimized for specific tasks. This versatility allows researchers and developers to choose the most suitable model for their applications, tailoring it to their unique requirements.
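
To make the interleaved data handling from item 1 more concrete, here is a minimal Python sketch of how a sequence that mixes text and images might be represented and flattened into a single prompt. It is not the xGen-MM API: the `TextSegment` and `ImageSegment` classes, the `flatten_interleaved` helper, the `<image>` placeholder token, and the file names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class TextSegment:
    text: str


@dataclass
class ImageSegment:
    path: str  # hypothetical file path; no image is actually loaded here


Segment = Union[TextSegment, ImageSegment]

# Assumed placeholder token, chosen for illustration only.
IMAGE_PLACEHOLDER = "<image>"


def flatten_interleaved(segments: List[Segment]) -> Tuple[str, List[str]]:
    """Turn an interleaved sequence into a prompt string and an ordered image list."""
    parts: List[str] = []
    image_paths: List[str] = []
    for seg in segments:
        if isinstance(seg, ImageSegment):
            # Mark where the image sits in the text and remember its order.
            parts.append(IMAGE_PLACEHOLDER)
            image_paths.append(seg.path)
        else:
            parts.append(seg.text)
    return " ".join(parts), image_paths


example = [
    TextSegment("Compare these two charts."),
    ImageSegment("chart_q1.png"),
    TextSegment("versus"),
    ImageSegment("chart_q2.png"),
    TextSegment("Which quarter had higher sales?"),
]

prompt, images = flatten_interleaved(example)
print(prompt)
print(images)
```

The point of the sketch is that the position of each image relative to the surrounding text is preserved, which is what distinguishes interleaved input from a single image paired with a single caption.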

Revolutionizing AI Applications

The potential applications of Salesforce xGen-MM are vast and far-reaching. Here are a few examples of how this technology can revolutionize various industries:

1. Healthcare:

xGen-MM can assist in medical image analysis, aiding in tasks such as diagnosing diseases, identifying anomalies, and providing personalized treatment recommendations.

2. Customer Service:

By understanding both text and visual cues, xGen-MM can enhance customer support interactions, providing more accurate and relevant responses to queries.

3. Education:

The models can be used to create interactive educational materials, providing personalized learning experiences and making complex concepts more accessible.

4. Creative Industries:

xGen-MM can assist in tasks like generating creative content, such as product descriptions, marketing copy, or even artistic pieces.

The Rise of Multimodal AI

Multimodal artificial intelligence (AI) is a rapidly evolving field that focuses on developing systems capable of understanding and processing information from multiple data modalities, such as text, images, audio, and video. Unlike traditional AI systems, which typically work with a single data type (often text), multimodal AI draws on several data types at once to achieve more comprehensive and accurate results.

By combining information from different sources, multimodal AI can better understand complex real-world scenarios and make more informed decisions. For example, a multimodal AI system could analyze a customer’s facial expressions, tone of voice, and written feedback to provide more personalized support.

Beyond Text and Images: The Future of Multimodal AI

xGen-MM represents a significant step towards the future of multimodal AI. By seamlessly integrating text, images, and other data types, these models pave the way for more sophisticated and human-like AI applications. As research progresses, we can expect even more innovative and powerful multimodal AI systems to emerge, transforming the way we interact with technology.

In conclusion, the release of xGen-MM marks a major milestone in the field of AI. By providing a powerful and versatile open-source framework, Salesforce is empowering researchers and developers to explore new frontiers in multimodal AI. As this technology continues to evolve, we can anticipate exciting advancements across various industries, from healthcare to creative arts.

FAQs about xGen-MM

1. What is the significance of xGen-MM's ability to handle interleaved data?

Interleaved data, which combines multiple images and text, is a more natural form of multimodal data. This capability allows Salesforce xGen-MM to better understand and process real-world information, leading to more accurate and relevant results.

2. How does xGen-MM compare to other open-source multimodal AI models?

3. What are the potential challenges and limitations of xGen-MM?

4. What are some real-world applications of multimodal AI?

5. How does multimodal AI differ from traditional AI?

Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM and Trust to craft impactful marketing strategies. He has 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development and Business Innovation.