Imagine a busy city where two neighbors lived side by side, each known for a unique way of helping the community. One neighbor was famous for always having the answers: where to find the best pizza, how to fix a leaky faucet, or the quickest route to the airport. This neighbor always had the perfect information to share.

The other neighbor was known as the one who could get things done. This neighbor could book a taxi or send a package on the spot, making life easier for everyone by handling tasks directly and immediately.

The first neighbor represents Retrieval-Augmented Generation (RAG), while the second represents the Model Context Protocol (MCP). Together, they are shaping the future of enterprise AI.

Setting the stage:

The buzz around the Model Context Protocol (MCP) is real. Platforms like Zapier, HubSpot (which recently launched its MCP server in public beta), and OpenAI (which is integrating MCP to connect tools directly to its models and APIs) are adopting it. Why? Because MCP standardizes how Large Language Models (LLMs) connect with tools, data, and workflows, so models can finally take real action.

But MCP sits alongside another critical technique: Retrieval-Augmented Generation (RAG). MCP is an open standard that allows AI to connect with tools and databases through a single, common interface, like a USB-C port for AI. RAG, by contrast, enables real-time information retrieval, helping keep answers current, relevant, and more accurate.

Together, they tackle core LLM pain points such as outdated training data, hallucinations, and the inability to act. Each approach has its own strengths and best-use cases.

Let’s dive in.

Understanding MCP:

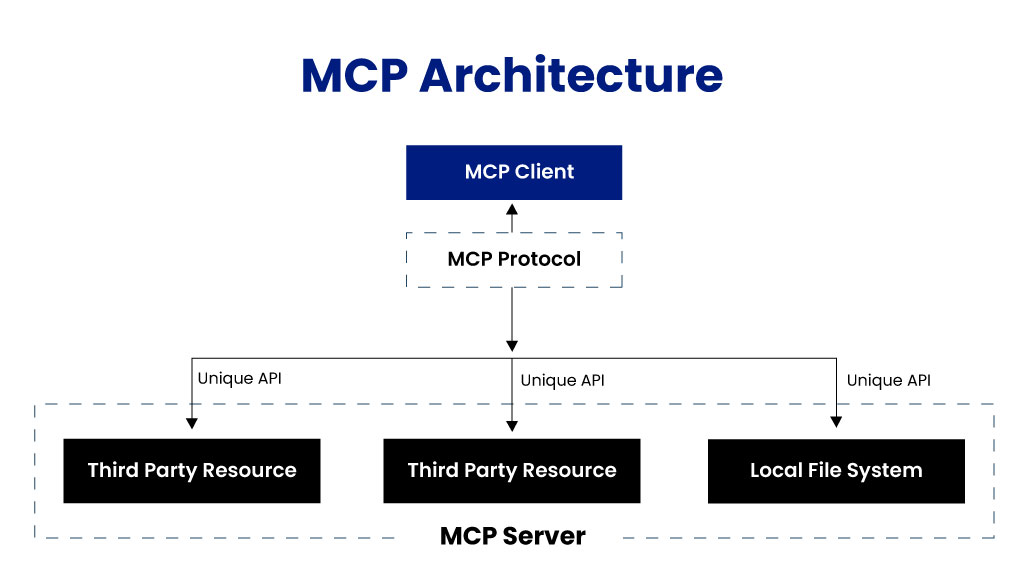

Before MCP, connecting an LLM to external tools or data was messy. Every new system required a custom-built adapter: one-off, brittle, and hard to reuse. Want to connect to a database? Write a custom bridge. Need an API? Build another custom connector. Adding a new system tomorrow? Back to square one. It’s an inefficient process.

MCP fixes this. It’s an open standard that gives LLMs a common way to connect with tools, APIs, and private data. The result? Simpler integrations, faster scaling, and LLMs that can finally do more than just talk.
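To make the idea of a common interface more concrete, here is a minimal, stdlib-only Python sketch. It borrows the general shape of MCP’s JSON-RPC 2.0 messages (a `tools/list` request to discover tools and a `tools/call` request to invoke one), but it is a simplified, in-process illustration, not the official MCP SDK and not a spec-complete server. The `ToolServer` class and the `get_stock_price` tool with its hard-coded price are hypothetical.

```python
import json
from typing import Any, Callable


class ToolServer:
    """Hypothetical, in-process stand-in for an MCP-style server.

    Tools are registered once; any client that sends the same
    JSON-RPC-style messages can discover and call them.
    """

    def __init__(self) -> None:
        self._tools: dict[str, tuple[str, Callable[..., Any]]] = {}

    def tool(self, description: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        # Decorator that registers a function under its own name.
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[fn.__name__] = (description, fn)
            return fn
        return register

    def handle(self, raw_request: str) -> str:
        # Parse a JSON-RPC 2.0 request and route it by method name.
        request = json.loads(raw_request)
        method = request.get("method")
        if method == "tools/list":
            result: Any = {"tools": [
                {"name": name, "description": desc}
                for name, (desc, _) in self._tools.items()
            ]}
        elif method == "tools/call":
            params = request.get("params", {})
            _, fn = self._tools[params["name"]]
            output = fn(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(output)}]}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})


server = ToolServer()


@server.tool("Return the latest price for a ticker (placeholder data).")
def get_stock_price(ticker: str) -> float:
    # Hypothetical hard-coded prices; a real tool would call a market-data API.
    prices = {"ACME": 123.45}
    return prices[ticker]


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_stock_price", "arguments": {"ticker": "ACME"}}}
    print(server.handle(json.dumps(call)))
```

Because every tool is reached through the same two request types, a client written once can use any tool the server registers; adding a tool means registering one more function rather than writing a new connector.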

Core Purpose of MCP

- Standardized Interaction: MCP gives LLMs a common way to connect with tools. No more building custom integrations for every new combination.

- Taking Action: LLMs can now execute actions, like sending an email or pulling the latest stock price, instead of just generating text.

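- Solving the Integration Headache: With multiple LLMs and countless tools, connecting every model to every tool directly means M × N custom integrations (M LLMs times N APIs, databases, services, etc.). By acting as a single shared protocol, MCP reduces this to roughly M + N, because each model and each tool implements MCP once.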
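The arithmetic behind the M × N problem is easy to check. This small sketch compares point-to-point connectors with one protocol integration per model and per tool; the counts of 4 LLMs and 25 tools are hypothetical.

```python
def integrations_needed(num_models: int, num_tools: int) -> tuple[int, int]:
    """Count integrations with and without a shared protocol.

    Without one, every model needs a bespoke connector to every tool (M x N).
    With one, each model and each tool implements the protocol once (M + N).
    """
    point_to_point = num_models * num_tools
    shared_protocol = num_models + num_tools
    return point_to_point, shared_protocol


if __name__ == "__main__":
    # Hypothetical example: 4 LLMs and 25 tools or data sources.
    bespoke, shared = integrations_needed(4, 25)
    print(f"custom connectors: {bespoke}, protocol integrations: {shared}")
```

With these numbers it prints `custom connectors: 100, protocol integrations: 29`, and the gap widens as either side grows.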

Understanding RAG:

Large Language Models are powerful, but on their own, they’re limited to what they were trained on. That means answers can be outdated, incomplete, or sometimes just plain wrong.

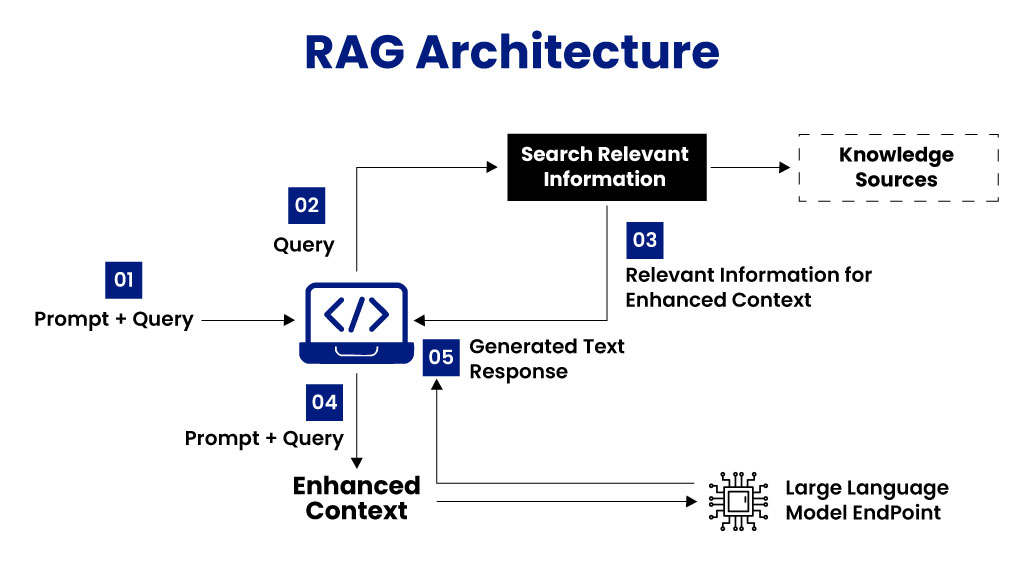

Retrieval-Augmented Generation (RAG) solves this problem by letting LLMs pull in live, external context—from SaaS apps, files, or databases—before answering. Think of it as giving your AI a personal research assistant who checks the right documents before responding.
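The retrieve, augment, generate loop can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: a bag-of-words cosine similarity stands in for a real embedding model and vector store, the three-document knowledge base is hypothetical, and the final LLM call is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric words only.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Augment the user's question with the retrieved passages.
    sources = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{sources}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [  # hypothetical knowledge base
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "Premium support is available 24/7 by phone.",
    ]
    question = "How long do refunds take?"
    prompt = build_prompt(question, retrieve(question, docs, k=1))
    print(prompt)  # this prompt would then be sent to an LLM
```

In production, `retrieve` would typically query a vector database, but the flow stays the same: find relevant passages first, then place them in the prompt the model sees.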

Core Purpose of RAG

- Grounds Responses in Verified Data: Instead of relying on probabilistic text generation alone, RAG retrieves authoritative information from trusted sources (databases, APIs, internal knowledge bases). This can substantially reduce hallucinations and helps ensure outputs are backed by evidence.

- Keeps LLMs Fresh and Domain-Specific: Since training data quickly becomes stale, RAG bridges the gap by plugging into real-time or continuously updated data streams. It also allows LLMs to adapt to niche business contexts, making them more valuable for enterprise use cases.

- Delivers Context-Aware Intelligence: By injecting query-specific context into prompts, RAG aligns responses with the user’s intent and organizational data. The result is not just accuracy, but actionable insights tuned to the enterprise’s unique environment.
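The grounding and freshness points above can be illustrated by attaching metadata to each retrieved passage. In this hypothetical sketch, only passages from an allow-list of trusted sources are kept, newer passages are preferred over older ones, and each passage is tagged with its source so the model can cite it. The `Passage` class, the source names, and the naive word-overlap match are all assumptions for illustration.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Passage:
    text: str
    source: str    # hypothetical document ID, used for citations
    updated: date  # last-modified date, used to prefer fresher content


# Hypothetical allow-list of authoritative sources.
TRUSTED_SOURCES = {"hr-policy", "finance-handbook"}


def words(text: str) -> set[str]:
    # Lowercased alphanumeric tokens, ignoring punctuation.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounded_context(query: str, passages: list[Passage], k: int = 2) -> str:
    query_words = words(query)
    # Keep trusted passages that share at least one word with the query.
    candidates = [p for p in passages
                  if p.source in TRUSTED_SOURCES and query_words & words(p.text)]
    # Prefer the most recently updated passages.
    candidates.sort(key=lambda p: p.updated, reverse=True)
    # Tag each passage with its source and date so answers can cite evidence.
    return "\n".join(f"[{p.source}, {p.updated.isoformat()}] {p.text}"
                     for p in candidates[:k])


if __name__ == "__main__":
    passages = [
        Passage("Travel expenses are reimbursed within 30 days.", "finance-handbook", date(2023, 1, 10)),
        Passage("Travel expenses are reimbursed within 14 days.", "finance-handbook", date(2025, 3, 1)),
        Passage("Travel expenses are reimbursed instantly!", "random-forum", date(2025, 6, 1)),
    ]
    print(grounded_context("How fast are travel expenses reimbursed?", passages, k=1))
```

Here it prints `[finance-handbook, 2025-03-01] Travel expenses are reimbursed within 14 days.`: the untrusted forum post is the newest passage but never reaches the prompt, and among trusted passages the 2025 policy wins over the 2023 one.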

MCP vs RAG — At a Glance

| Feature | RAG (Retrieval-Augmented Generation) | MCP (Model Context Protocol) |
|---|---|---|
| Primary Goal | Enhance LLM knowledge, improve accuracy, provide current info | Standardize LLM interaction with tools/data, enable action |
| Core Function | Retrieve data → Augment prompt → Generate response | LLM requests tool/resource via client → Server executes/provides → LLM uses result |
| Data Interaction | Read from knowledge bases | Read & Write via tools (e.g., query API, update database) |
| LLM Role | Synthesizes retrieved info to generate informed response | Reasons about tool use, calls tools, interprets results, takes action |
| Nature | A technique/framework for building LLM apps | An open protocol/standard for communication |
| Analogy | LLM’s research assistant + library | LLM’s universal remote control / USB-C port for tools |

How MCP & RAG Complement Each Other:

RAG and MCP are not an either-or choice. The real enterprise value comes not from treating RAG (Retrieval-Augmented Generation) and MCP (Model Context Protocol) as separate capabilities, but from orchestrating them in tandem.

- RAG as a Tool inside MCP: Think of an MCP server that offers a “knowledge lookup” tool. Under the hood, that’s a RAG pipeline. The AI agent can use it to research something — then immediately use other MCP tools to act on it (like drafting an email or scheduling a post).

- MCP as the Coordinator: In systems with multiple AI agents, some might use RAG for their knowledge needs. MCP acts like the traffic controller — letting these agents share info, delegate tasks, and stay in sync.

- MCP Feeding Context into RAG: MCP can also fetch live context (like a user’s location or status) that makes RAG queries sharper. RAG might pull general info, while MCP brings in user-specific details. Together, the LLM combines both to deliver a more relevant, personalized response.
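The RAG-as-a-tool and context-feeding patterns can be sketched together. In this hypothetical Python example, a plain dictionary stands in for the tools an MCP server exposes: `knowledge_lookup` hides a toy retrieval step, `get_user_context` returns hard-coded user details, and `draft_email` is an action tool. In a real agent the LLM would decide which tools to call and in what order; here that plan is fixed so the flow is easy to follow. All tool names, data, and the `call_tool` helper are assumptions, not part of MCP or any SDK.

```python
import re
from typing import Any, Callable

# Hypothetical registry standing in for the tools an MCP server exposes.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    # Register a function as a callable tool under its own name.
    TOOLS[fn.__name__] = fn
    return fn


# Hypothetical documents behind the knowledge-lookup tool.
KNOWLEDGE_BASE = [
    "The Q3 product launch is scheduled for September 15.",
    "The cafeteria menu changes every Monday.",
]


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@tool
def knowledge_lookup(query: str) -> str:
    # RAG under the hood (toy version): return the passage with the most
    # words in common with the query.
    q = _words(query)
    return max(KNOWLEDGE_BASE, key=lambda doc: len(q & _words(doc)))


@tool
def get_user_context(user_id: str) -> dict[str, str]:
    # Live, user-specific context; hard-coded here for illustration.
    users = {"u-42": {"name": "Priya", "email": "priya@example.com"}}
    return users[user_id]


@tool
def draft_email(to: str, subject: str, body: str) -> dict[str, str]:
    # Action tool; a real server would create the draft in an email system.
    return {"to": to, "subject": subject, "body": body, "status": "draft"}


def call_tool(name: str, **arguments: Any) -> Any:
    # One entry point for every tool, mirroring a call-by-name interface.
    return TOOLS[name](**arguments)


def research_and_notify(user_id: str, question: str) -> dict[str, str]:
    # Fixed plan for illustration; an LLM would normally choose these steps.
    fact = call_tool("knowledge_lookup", query=question)   # research (RAG)
    user = call_tool("get_user_context", user_id=user_id)  # personalize
    return call_tool("draft_email", to=user["email"],      # act
                     subject=f"Re: {question}",
                     body=f"Hi {user['name']}, {fact}")


if __name__ == "__main__":
    print(research_and_notify("u-42", "When is the Q3 product launch?"))
```

The agent never needs to know that `knowledge_lookup` is a retrieval pipeline; to the caller it is just another tool, which is what lets research and action sit behind one interface.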

When Should You Use RAG & MCP?

Here’s how to know which one (or both) to lean on:

Use RAG when…

- You need to bring in external knowledge (docs, articles, research, policies).

- You need up-to-date but relatively stable information pulled directly from your own data sources, which also helps reduce hallucinations.

- Your AI should stay up to date without retraining the model.

Use MCP when…

- You need to govern how context is delivered (permissions, compliance, audit trails).

- AI agents must take actions (send an email, file a ticket, update records).

- You care about trust and control — making sure the AI follows enterprise rules.
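As one illustration of governing tool use, this sketch wraps every tool call in a permission check and an audit-log entry. It is not a mechanism defined by MCP; it is the kind of rule an MCP server or host application could apply on top of the protocol. The roles, the `PERMISSIONS` table, `governed_call`, and the `file_ticket` tool are hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Callable

# Hypothetical role-to-tool permissions an enterprise might configure.
PERMISSIONS: dict[str, set[str]] = {
    "support-agent": {"search_tickets", "file_ticket"},
    "viewer": {"search_tickets"},
}

# In-memory audit trail; a real system would write to durable storage.
AUDIT_LOG: list[dict[str, Any]] = []


def governed_call(role: str, tool_name: str, tool: Callable[..., Any], **arguments: Any) -> Any:
    """Run a tool only if the role is allowed to, recording every attempt."""
    allowed = tool_name in PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool_name,
        "arguments": arguments,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{role!r} may not call {tool_name!r}")
    return tool(**arguments)


def file_ticket(summary: str) -> str:
    # Hypothetical action tool; a real one would call a ticketing API.
    return f"Ticket created: {summary}"


if __name__ == "__main__":
    print(governed_call("support-agent", "file_ticket", file_ticket, summary="Printer offline"))
    try:
        governed_call("viewer", "file_ticket", file_ticket, summary="Printer offline")
    except PermissionError as err:
        print("Blocked:", err)
```

Denied attempts are logged too, since a compliance review usually needs to see what was blocked as well as what ran.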

Use Both RAG + MCP when…

- You want accurate answers that also respect enterprise rules.

- The LLM must combine knowledge retrieval with actions (e.g., research a topic, then draft and send a message).

- You need to personalize results by blending general information (via RAG) with real-time, user-specific context (via MCP).

Trust, compliance, and reliability are as important as intelligence.

How to Get Started:

The shift from experimentation to enterprise AI maturity can be overwhelming. Here’s a practical roadmap:

- Audit Your Context Needs: Where does your AI rely on knowledge—internal, external, or both?

- Identify Critical Use Cases: Customer support, compliance monitoring, financial insights, etc.

- Pilot a Hybrid Approach: Start with RAG, wrap with MCP to enforce structure.

- Scale Intelligently: Standardize MCP as your orchestration layer across departments.

How can CEPTES (a Saksoft company) help?

At CEPTES, with our third-party MCP server, we make it easier for organizations to design, deploy, and scale RAG + MCP pipelines that are secure, governed, and enterprise-ready. Whether it’s integrating AI with platforms like Salesforce, orchestrating multi-agent systems, or ensuring compliance in sensitive domains, we can help you on your agentic journey.

If you’re curious to see how MCP transforms real enterprise environments, join us at Dreamforce 2025. As a proud sponsor, we will showcase our AI innovations with live demos, so you can experience the power of MCP in action. You can book a 1:1 meeting here.

FAQs:

1. Is RAG obsolete with MCP?

2. Are RAG and MCP competitors?

3. Is MCP tied to one LLM provider?

4. Does MCP store your data?

Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM, and Trust to craft impactful marketing strategies. He brings 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development, and Business Innovation.