In the ever-evolving world of Artificial Intelligence (AI), large language models (LLMs) have transformed how businesses, researchers, and developers process language data. However, as LLMs grow more capable and complex, evaluating their performance accurately becomes both more important and harder. Human evaluation has traditionally been the gold standard for assessing the quality of LLM outputs, but it is slow, costly, and difficult to scale as the pace of model development accelerates.

Enter Salesforce AI’s latest release: SFR-Judge, a family of judge models designed to assess LLM outputs accurately and efficiently. The family consists of three models with 8 billion (8B), 12 billion (12B), and 70 billion (70B) parameters, built on Meta Llama 3 (8B and 70B) and Mistral NeMo (12B). These models are reshaping how the AI community evaluates and fine-tunes LLMs by offering a scalable, bias-reduced alternative to human judgments.

The Role of Judge Models in AI

Source: Salesforce AI Research

Judge models like SFR-Judge are critical in evaluating LLM-generated outputs across various tasks. Whether it’s rating responses, comparing outputs, or classifying the quality of text, judge models help researchers determine which AI models perform best and how to improve them. In reinforcement learning scenarios, they also serve as reward models, guiding the behavior of LLMs by encouraging good responses and discouraging poor ones.

One of the key challenges with automated LLM evaluation is bias. For example, some judge models exhibit length bias, favoring longer responses even when shorter, more concise ones are better. Others show position bias, where the order in which responses are presented influences the verdict. These biases make evaluations inconsistent and unreliable, which in turn distorts the training and real-world performance of the downstream models the judges are used to improve.
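
Both biases can be measured with simple checks. The sketch below assumes a hypothetical pairwise judge function, `judge(instruction, response_a, response_b)`, that returns `"A"` or `"B"`; this interface is illustrative and is not SFR-Judge's actual API. Position consistency asks the judge twice with the responses swapped and counts how often the same underlying response wins; the length check counts how often the longer response wins. The two toy judges exist only to show what each metric catches.

```python
from typing import Callable, List, Tuple

# Hypothetical judge interface (an assumption, not SFR-Judge's API):
# judge(instruction, response_a, response_b) returns "A" or "B".
Judge = Callable[[str, str, str], str]


def preferred_index(judge: Judge, q: str, r1: str, r2: str, swap: bool) -> int:
    """Return 1 or 2: which original response the judge preferred."""
    if not swap:
        return 1 if judge(q, r1, r2) == "A" else 2
    # r2 is shown first, so an "A" verdict means r2 won.
    return 2 if judge(q, r2, r1) == "A" else 1


def position_consistency(judge: Judge, pairs: List[Tuple[str, str, str]]) -> float:
    """Fraction of pairs whose verdict survives swapping the response order."""
    consistent = sum(
        preferred_index(judge, q, r1, r2, swap=False)
        == preferred_index(judge, q, r1, r2, swap=True)
        for q, r1, r2 in pairs
    )
    return consistent / len(pairs)


def longer_wins_rate(judge: Judge, pairs: List[Tuple[str, str, str]]) -> float:
    """Fraction of unequal-length pairs where the longer response is preferred."""
    decided = [(q, r1, r2) for q, r1, r2 in pairs if len(r1) != len(r2)]
    if not decided:
        return float("nan")
    wins = sum(
        preferred_index(judge, q, r1, r2, swap=False) == (1 if len(r1) > len(r2) else 2)
        for q, r1, r2 in decided
    )
    return wins / len(decided)


# Toy judges for illustration only.
def always_first(q: str, a: str, b: str) -> str:
    return "A"  # pure position bias


def prefers_longer(q: str, a: str, b: str) -> str:
    return "A" if len(a) > len(b) else "B"  # pure length bias


pairs = [
    ("Capital of France?", "Paris.", "The capital of France is Paris, a large city."),
    ("2 + 2?", "4", "The answer is 4."),
]
print(position_consistency(always_first, pairs))    # 0.0
print(position_consistency(prefers_longer, pairs))  # 1.0
print(longer_wins_rate(prefers_longer, pairs))      # 1.0
```

A real judge would be an LLM call. Note that a high longer-wins rate is only evidence of length bias when compared against human quality labels on the same pairs, since longer answers are sometimes genuinely better.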

Salesforce’s Solution: The SFR-Judge Models

To address these challenges, Salesforce AI Research introduced the SFR-Judge family, which significantly reduces these biases while delivering strong judgment accuracy. The models are trained with Direct Preference Optimization (DPO), which learns from pairs of preferred (positive) and rejected (negative) examples, helping them make more accurate and consistent evaluations.
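
As a reference point, the standard DPO objective for a single preference pair fits in a few lines. This is a sketch of the generic, widely published DPO loss, not Salesforce's training code; the article does not describe SFR-Judge's exact recipe, and `beta=0.1` is an illustrative value. For a judge model, the "chosen" and "rejected" responses would be a preferred and a rejected judgment for the same evaluation prompt.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_logp_chosen: float,
    policy_logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,  # illustrative strength of the implicit KL constraint
) -> float:
    """Generic DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    trainable policy or the frozen reference model.
    """
    chosen_ratio = policy_logp_chosen - ref_logp_chosen
    rejected_ratio = policy_logp_rejected - ref_logp_rejected
    margin = beta * (chosen_ratio - rejected_ratio)
    return -log_sigmoid(margin)


# Policy identical to the reference: loss is log 2.
print(round(dpo_loss(0.0, 0.0, 0.0, 0.0), 4))  # 0.6931
# Policy has shifted probability toward the chosen response: loss drops.
print(dpo_loss(-10.0, -20.0, -12.0, -18.0))
```

The loss falls below log 2 whenever the policy raises the chosen response's likelihood (relative to the reference) more than the rejected one's, which is how DPO learns from both positive and negative examples without a separate reward model.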

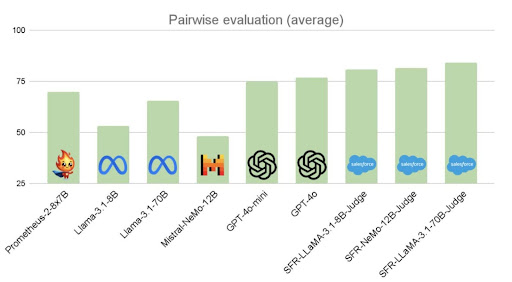

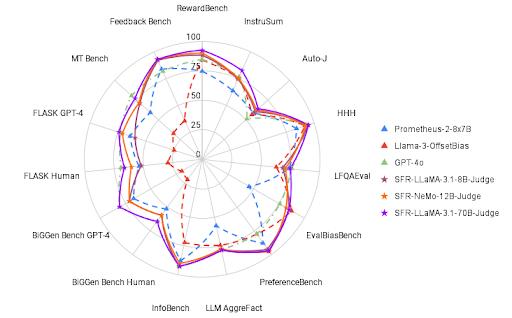

In extensive testing, SFR-Judge performed strongly across multiple benchmarks, including RewardBench, where it reached 92.7% accuracy and outperformed proprietary models such as GPT-4o. This makes SFR-Judge both a strong evaluation tool and an effective reward model for improving downstream models in reinforcement learning from human feedback (RLHF) scenarios.
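
RewardBench-style scoring reduces to pairwise accuracy: for each prompt with a human-labeled better ("chosen") and worse ("rejected") response, the judge is correct if it prefers the chosen one. The sketch below assumes a hypothetical judge function, `judge(prompt, response_a, response_b)`, returning `"A"` or `"B"`; it is not SFR-Judge's API. It alternates which response is shown first so that a position-biased judge cannot score well by always picking one slot, and it computes a single overall accuracy, whereas the official RewardBench score aggregates results by category.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical judge interface (an assumption, not SFR-Judge's API):
# judge(prompt, response_a, response_b) returns "A" or "B".
Judge = Callable[[str, str, str], str]


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # response labeled as better
    rejected: str  # response labeled as worse


def pairwise_accuracy(judge: Judge, pairs: List[PreferencePair]) -> float:
    """Fraction of pairs where the judge prefers the chosen response."""
    if not pairs:
        return float("nan")
    correct = 0
    for i, p in enumerate(pairs):
        if i % 2 == 0:
            # Chosen shown first: a correct judge answers "A".
            correct += judge(p.prompt, p.chosen, p.rejected) == "A"
        else:
            # Chosen shown second: a correct judge answers "B".
            correct += judge(p.prompt, p.rejected, p.chosen) == "B"
    return correct / len(pairs)


# Toy judges for illustration only.
def keyword_judge(prompt: str, a: str, b: str) -> str:
    return "A" if "correct" in a else "B"


def always_first(prompt: str, a: str, b: str) -> str:
    return "A"


pairs = [PreferencePair(f"q{i}", "correct answer", "wrong answer") for i in range(4)]
print(pairwise_accuracy(keyword_judge, pairs))  # 1.0
print(pairwise_accuracy(always_first, pairs))   # 0.5
```

With the toy `always_first` judge, accuracy falls to 0.5, exactly chance, which is what alternating the order is meant to expose.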

Key Features of SFR-Judge Models

1. Superior Accuracy and Performance

SFR-Judge models are tested across 13 benchmarks and outperform competing judge models on 10 of these benchmarks. Their accuracy on RewardBench surpasses 90%, setting a new industry standard for LLM evaluation. Whether used for pairwise comparisons, single ratings, or binary classification, SFR-Judge consistently delivers high-quality judgments.

Source: Salesforce AI Research

2. Bias Mitigation

Thanks to Salesforce’s training approach, SFR-Judge significantly reduces common biases such as length and position bias. On EvalBiasBench, a benchmark designed to test judge models for various types of bias, SFR-Judge showed high consistency, meaning its judgments stay largely stable when response order or length changes.

3. Structured Explanations

Unlike many other judge models, SFR-Judge doesn’t operate as a black box. It is trained to provide detailed explanations for its judgments, offering insights into why a particular output was rated higher or lower. This transparency makes it an invaluable tool for both model development and downstream fine-tuning.

4. Versatility in Evaluation Tasks

The SFR-Judge family supports a wide range of evaluation tasks, from pairwise comparisons to assessing instruction-following capabilities and safety concerns. This versatility makes it adaptable to different AI scenarios, ensuring that it can be used across multiple domains and applications.

How SFR-Judge Enhances Model Fine-Tuning

Beyond evaluation, SFR-Judge also plays a critical role in model fine-tuning through reward modeling. In RLHF, a downstream model generates outputs, and a judge model like SFR-Judge scores these responses. The downstream model is then trained to generate outputs that align with higher-scoring responses. This continuous learning cycle significantly improves the model’s performance.
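
Two common ways to turn judge scores into a training signal are best-of-n selection (keep the highest-scored sample as a fine-tuning target) and building chosen/rejected pairs for preference training such as DPO. The sketch assumes a hypothetical single-rating judge, `scorer(prompt, response)`, returning a float; the article does not say which of these schemes Salesforce used in its downstream experiments, and the toy scorer is for illustration only.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical single-rating judge (an assumption, not SFR-Judge's API):
# scorer(prompt, response) returns a quality score, higher is better.
Scorer = Callable[[str, str], float]


def best_of_n(scorer: Scorer, prompt: str, candidates: List[str]) -> str:
    """Return the highest-scored candidate; ties keep the earliest one."""
    return max(candidates, key=lambda c: scorer(prompt, c))


def make_preference_pair(
    scorer: Scorer, prompt: str, candidates: List[str]
) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected) = (best, worst) candidate, or None if all tie."""
    scored = [(scorer(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda t: t[0])
    worst_score, worst = min(scored, key=lambda t: t[0])
    if best_score == worst_score:
        return None  # the judge gave no signal to learn from
    return best, worst


# Toy scorer for illustration: rewards mentioning the correct city.
def toy_scorer(prompt: str, response: str) -> float:
    return 5.0 if "Paris" in response else 1.0


prompt = "What is the capital of France?"
samples = ["London.", "Paris.", "It is Paris."]
print(best_of_n(toy_scorer, prompt, samples))             # Paris.
print(make_preference_pair(toy_scorer, prompt, samples))  # ('Paris.', 'London.')
```

In practice each judge call is an LLM inference, so a real pipeline would score each candidate once and reuse the scores rather than calling the judge again per helper.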

For example, experiments with SFR-Judge on the AlpacaEval-2 benchmark, which tests instruction-following capabilities, showed that downstream models trained with SFR-Judge’s feedback produced better results than those trained with other judge models. This highlights SFR-Judge’s potential to enhance not only evaluations but also the fine-tuning and deployment of LLMs in real-world applications.

Why SFR-Judge Matters for AI Advancement

The introduction of SFR-Judge by Salesforce AI is a significant step forward in LLM evaluation. By offering bias-reduced, scalable, and explainable judgments, SFR-Judge paves the way for more accurate model assessments and better-performing AI systems. Its strong performance on industry-standard benchmarks, coupled with its ability to improve downstream models, makes SFR-Judge a valuable tool for AI researchers and developers worldwide.

As the demand for more sophisticated AI solutions continues to grow, tools like SFR-Judge will be essential in ensuring that AI systems are reliable, trustworthy, and efficient. Whether you’re working on the next breakthrough in AI or looking to improve existing models, SFR-Judge offers the capabilities needed to take your work to the next level.

Wrapping Up:

Salesforce AI’s SFR-Judge models change how LLM outputs are evaluated, providing bias-reduced, scalable, and transparent judgments that help improve LLM performance. With industry-leading accuracy and reduced biases, SFR-Judge enhances both the evaluation and the fine-tuning of AI models, driving significant advancements in AI development.

If you’re looking for expert help with Salesforce AI solutions, we’re here to assist. Contact us at contact@ceptes.com, and let’s unlock the full potential of AI together!

FAQs

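1. What is SFR-Judge?

SFR-Judge is a family of judge models from Salesforce AI Research, available in 8B, 12B, and 70B parameter sizes and built on Meta Llama 3 and Mistral NeMo. It evaluates LLM outputs and can also serve as a reward model for fine-tuning.

2. How is SFR-Judge different from other judge models?

It is trained with Direct Preference Optimization (DPO), significantly reduces common biases such as length and position bias, and provides detailed explanations for its judgments instead of acting as a black box.

3. What are the key applications of SFR-Judge?

Evaluating LLM outputs through pairwise comparisons, single ratings, and binary classification (including instruction-following and safety assessments), and acting as a reward model that improves downstream models during fine-tuning.

4. What makes SFR-Judge effective in reducing bias?

Its DPO-based training learns from both positive and negative examples, and on EvalBiasBench its judgments stayed largely stable when response order or length changed.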

5. What benchmarks have SFR-Judge excelled in?

SFR-Judge has outperformed other models on 10 of 13 benchmarks, including a 92.7% accuracy on RewardBench, setting a new industry standard for LLM evaluation.

By leveraging Salesforce AI’s SFR-Judge models, you can accelerate your AI development and ensure that your models deliver consistent, high-quality performance across various applications.

Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM and Trust to craft impactful marketing strategies. He brings 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development and Business Innovation.