Picture this.

You’re in the middle of a trivia night, and your brain draws a blank. You quickly glance at your friend (the know-it-all) for a hint, and bam! You have the answer. That’s kind of what Retrieval Augmented Generation (RAG) does, but for AI.

RAG is like an AI cheat code. Instead of guessing or making stuff up, it retrieves accurate information from trusted sources and folds it into the final response. It’s like combining a top-notch researcher with a creative storyteller.

Let’s dive deeper into the key concepts, how it works and why it’s revolutionizing the Data Cloud & AI game.

What is RAG?

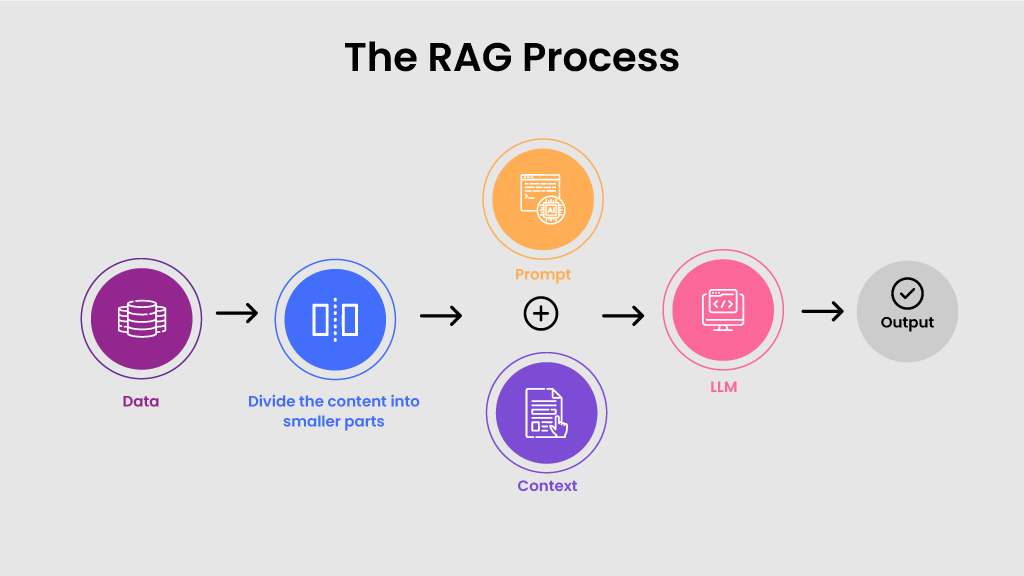

An AI model is only as powerful as the data it is trained on. It needs proper context and plenty of factual data to thrive. Retrieval augmented generation is an AI technique that helps companies embed their most current and relevant proprietary data, both structured and unstructured, directly into their LLM prompt to make generative AI more trusted and relevant.

Simply put, RAG helps companies to retrieve data – no matter where it lives – for better AI results. The RAG framework acts as a bridge between a search engine and a language model, handling queries and responses seamlessly. What makes it special? It works with unstructured data like emails, call transcripts, and knowledge articles—data that doesn’t fit neatly into traditional databases.

Let’s break it down:

Retrieval: The AI finds the most relevant data using tools like semantic search, which understands the meaning behind a user’s request, not just the keywords.

Augmented: Instead of pre-training the AI on all data, it adds the right context to the question on the fly, like handing the AI notes before it writes an answer.

Generation: The AI uses this context to create a trustworthy response, like drafting a perfect email reply or a helpful customer service solution, while showing its data sources for accuracy.
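To make the three stages concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `DOCUMENTS` knowledge base, the function names, and the word-overlap scoring (a crude stand-in for real semantic search) are hypothetical, and `generate` is only a placeholder where a real LLM call would go. It is not how Data Cloud implements RAG.

```python
import re

# Hypothetical in-memory knowledge base (illustration only).
DOCUMENTS = {
    "kb-101": "To reset your password, open Settings and choose Reset Password.",
    "kb-102": "Refunds are issued within 5 business days of an approved return.",
    "kb-103": "Our support team is available on weekdays from 9am to 6pm.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Retrieval: rank documents by word overlap with the question.

    Word overlap is a simple stand-in for semantic search.
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(question_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def augment(question, passages):
    """Augmented: add the retrieved passages to the prompt on the fly."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return f"Context:\n{context}\n\nQuestion: {question}"


def generate(prompt):
    """Generation: placeholder for a real LLM call (assumption)."""
    return f"(an LLM would answer here, given a {len(prompt)}-character prompt)"


def answer(question):
    """Run all three stages and return the reply plus its source ids."""
    passages = retrieve(question, DOCUMENTS)
    prompt = augment(question, passages)
    return generate(prompt), [doc_id for doc_id, _ in passages]
```

Calling `answer("How do I reset my password?")` returns the placeholder reply together with the source id `kb-101`, which mirrors the idea of showing data sources alongside the response.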

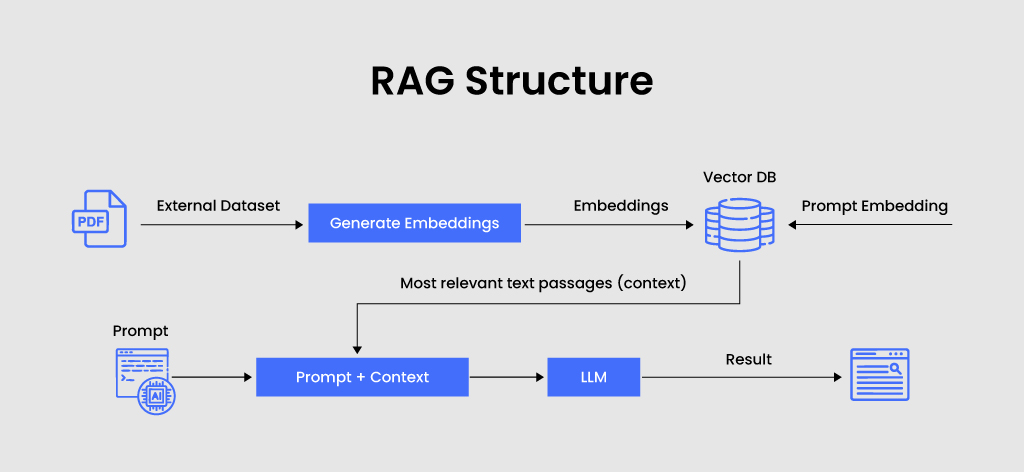

How RAG Works

Large language models (LLMs) are great at generating text, but they’re trained on general public data, not your company’s specific knowledge. So, when your employees or customers need detailed answers, LLMs might miss the mark or even hallucinate. RAG addresses this by pairing data retrieval with text generation, grounding responses in your own trusted data and reducing incorrect outputs.

As per a report by Cornell University, RAG-based responses have been shown to be nearly 43% more accurate than those generated by fine-tuned LLMs.

The foundation of Retrieval Augmented Generation is Data Cloud. Companies can improve customer experiences by integrating RAG in Data Cloud. This integration transforms unstructured text into searchable insights, leveraging Data Cloud’s robust framework for data ingestion, transformation, and indexing.

Let’s get into the step-by-step details of how RAG in Data Cloud works:

1. Initial query processing:

The AI first processes the user’s input to work out the intent, context, and specific information requirements of the query.

2. Retrieving external data:

Next, the system searches trusted sources such as databases, APIs, or documents to fetch the most relevant information. This step lets the AI go beyond the data the LLM was trained on, using the latest and most accurate details to craft its response.

3. Data vectorization for relevancy matching:

Data Cloud Vector Database brings structured and unstructured data together by turning it into numerical representations called vector embeddings. This happens through a special type of LLM, called an embedding model, accessed via the Einstein Trust Layer. These embeddings make it easier for AI to analyze data, match it to queries, and generate accurate, meaningful responses.
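A toy Python sketch can show what "matching by vectors" means. The assumptions are stated up front: `embed` here is a simple word-count vector over a fixed, hypothetical vocabulary, standing in for a learned embedding model, and `VOCAB` and `CHUNKS` are made-up sample data. Real embedding models produce dense vectors that capture meaning, not just word counts, but the similarity step works the same way.

```python
import math
import re
from collections import Counter


def embed(text, vocabulary):
    """Toy embedding: count how often each vocabulary word appears.

    Stand-in for a learned embedding model (assumption).
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query, chunks, vocabulary):
    """Return the chunk whose vector is most similar to the query vector."""
    query_vector = embed(query, vocabulary)
    return max(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, embed(chunk, vocabulary)),
    )


# Hypothetical vocabulary and text chunks, for illustration only.
VOCAB = ["invoice", "refund", "password", "login", "shipping", "delay"]
CHUNKS = [
    "Refund requests need the original invoice number.",
    "Reset your password from the login page.",
    "Shipping delay notices are sent by email.",
]
```

With this setup, `nearest("I forgot my login password", CHUNKS, VOCAB)` picks the password chunk, because its vector points in the same direction as the query vector while the other two share no vocabulary words with it.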

4. Augment prompts with relevant data:

Once the relevant external data is found, it’s added to the AI’s prompt in a way that fits smoothly with the original query to keep the query’s context intact. This augmented prompt ensures the AI creates detailed and accurate responses that are fully aligned with the user’s question.
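As a rough sketch of this step, the Python function below assembles an augmented prompt from retrieved passages. The function name, the `max_context_chars` budget, and the instruction wording are all assumptions chosen for illustration; a production system would typically budget by tokens and use its own prompt template.

```python
def build_augmented_prompt(question, passages, max_context_chars=500):
    """Combine retrieved passages with the user's question.

    passages: list of (source_id, text) tuples, most relevant first.
    Passages are added in order until the (hypothetical) character
    budget would be exceeded, so the most relevant ones are kept.
    """
    selected = []
    used = 0
    for source_id, text in passages:
        entry = f"[{source_id}] {text}"
        if used + len(entry) > max_context_chars:
            break
        selected.append(entry)
        used += len(entry)

    context = "\n".join(selected) if selected else "(no relevant context found)"
    return (
        "Use only the context below to answer. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

The original question is placed last and left unchanged, which keeps the query’s context intact, and each passage carries its source id so the response can point back to where the information came from.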

5. Consistent data updates:

Regular updates to external data sources ensure the RAG system stays accurate and relevant. These updates can be automated or scheduled, keeping responses current and reliable for evolving needs.
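One possible way to automate such updates is to re-embed only the documents that changed since the last run. The Python sketch below assumes a hypothetical in-memory `index` dictionary and an `embed` function passed in by the caller; it illustrates the idea of an incremental refresh and is not a description of how Data Cloud schedules its own updates.

```python
import hashlib


def fingerprint(text):
    """Return a stable hash of the text, used to detect changes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def refresh_index(index, source_documents, embed):
    """Bring the index in line with the current source documents.

    index: dict of doc_id -> {"hash": str, "vector": ...} (hypothetical layout).
    source_documents: dict of doc_id -> current text.
    embed: callable that turns text into a vector (supplied by the caller).
    Returns (updated_ids, removed_ids).
    """
    updated = []
    for doc_id, text in source_documents.items():
        digest = fingerprint(text)
        entry = index.get(doc_id)
        # Re-embed only new documents or documents whose text changed.
        if entry is None or entry["hash"] != digest:
            index[doc_id] = {"hash": digest, "vector": embed(text)}
            updated.append(doc_id)

    # Drop entries whose source document no longer exists.
    removed = [doc_id for doc_id in index if doc_id not in source_documents]
    for doc_id in removed:
        del index[doc_id]

    return updated, removed
```

Running `refresh_index` on a schedule keeps the stored vectors aligned with the source data while skipping documents that have not changed.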

Challenges of LLMs vs. Solutions by RAG:

| Challenges of LLMs | Solutions by RAG |
|---|---|
| Presentation of false information when an appropriate answer is not available. | RAG instructs the LLM to retrieve data from authoritative sources, defined in advance, reducing the scope for errors. |
| Outdated or too general information when users expect a specific and up-to-date answer. | RAG retrieves up-to-date information, ensuring answers are relevant and current. |
| Inaccurate response due to terminological confusion where different sources use the same terminology for different concepts. | RAG allows organizations to control the quality of the model’s output by customizing knowledge sources. |

Practical Use Cases of Retrieval Augmented Language Model:

Advanced Question-Answering Systems:

RAG models improve question-answering systems by fetching and generating accurate responses. For example, healthcare systems can use RAG to answer medical queries by pulling data from medical literature.

Content Creation and Summarization:

RAG simplifies content creation by pulling relevant data from various sources and generating high-quality articles or summaries. News agencies can use it to write articles or summarize lengthy reports automatically.

Conversational Agents and Chatbots:

RAG boosts chatbots and virtual assistants by providing accurate, context-based responses, making them more helpful in customer service or personal assistance.

Information Retrieval:

Search engines powered by RAG deliver more accurate results and create useful snippets to summarize content, improving the search experience.

Educational Tools:

RAG enhances learning tools by generating personalized explanations, questions, and study materials, tailoring the experience to individual learners.

Legal Research:

RAG can speed up legal research by retrieving relevant cases, drafting documents, and helping lawyers analyze information with ease and accuracy.

Content Recommendation Systems:

RAG powers advanced content recommendation systems across digital platforms by understanding user preferences, retrieving relevant content, and generating personalized suggestions for better engagement.

How RAG Works with Agentforce and Data Cloud

RAG, Data Cloud, and Agentforce work hand in hand. When a user interacts with Agentforce, the RAG model leverages Data Cloud to retrieve precise, up-to-date information and integrate it into the AI’s response generation process. This dynamic interaction ensures that AI outputs are not generic but tailored to the most current and relevant business data, boosting the reliability and accuracy of AI-driven insights and decisions.

For instance, imagine a customer service manager asking Agentforce for a detailed analysis of unresolved support cases. The RAG model retrieves real-time data on open cases, customer feedback, and support agent performance from the Data Cloud. This enables Agentforce to produce a comprehensive report highlighting bottlenecks, high-priority issues, and areas needing immediate attention. With this actionable information, the manager can allocate resources effectively, improve resolution times, and enhance overall customer satisfaction.

By seamlessly connecting Data Cloud’s robust data management with Agentforce’s AI capabilities, RAG empowers businesses to make smarter, faster decisions rooted in real-time insights.

Future of RAG: What We Can Expect

The integration of Retrieval Augmented Generation (RAG) into Data Cloud has unlocked advanced customization for information retrieval. Users can now tailor how data is ingested, transformed, and utilized. With retrievers natively integrated into Prompt Builder, query objects and requirements can be configured directly within the prompt engineering process. This evolution transforms RAG from a static tool into a transparent and highly configurable feature within the Salesforce ecosystem.

Retrieval augmented generation (RAG) combines the best of retrieval and generative models to create more accurate, context-rich text, even if it’s a bit more complex than using a standalone LLM. By blending retrieval with generation, RAG ensures higher-quality AI outputs, while semantic search focuses on finding the most relevant information by understanding the meaning behind queries. Together, they make NLP smarter and more effective!

For more Data Cloud and Agentforce consultation, feel free to contact us.

FAQs

1. How does RAG handle privacy concerns with sensitive information?

2. How does RAG compare to traditional retraining for LLM performance enhancement?

3. What are the key challenges in implementing RAG models?

Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM and Trust to craft impactful marketing strategies. He brings 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development and Business Innovation.