Today, businesses are all about data: data from different sources such as the CRM, the ERP, the marketing platform, the commerce platform, and whatnot. And to be honest, it’s not even just about the data; it’s about how you put it to use. It’s about what you derive from it, and about how you drive personalized experiences and maximize efficiency.

And this is where Salesforce Data Cloud steps in. Salesforce Data Cloud (formerly known as Customer Data Platform) enables organizations to unify, analyze, and activate data across various touchpoints.

Unlike other Salesforce core clouds such as Sales Cloud and Service Cloud, the Salesforce Data Cloud architecture takes another route. This blog explores the Salesforce Data Cloud architecture, breaking down its key components and functionalities, drawing insights from top industry sources.

The Foundation

The backbone of Salesforce Data Cloud is Salesforce’s Hyperforce, a modern cloud infrastructure that allows Salesforce products to run on leading public cloud platforms. The primary cloud infrastructure for Salesforce Data Cloud is Amazon Web Services (AWS), which provides a robust and scalable environment for data storage, computing, and analytics. Key AWS services utilized include:

- Amazon S3: For high-capacity, scalable data storage.

- Amazon Redshift: For data warehousing, enabling fast querying and analytics.

- AWS Lambda: For serverless computing, allowing real-time data processing and automation.

- Amazon EMR: For big data processing using frameworks like Apache Spark and Hadoop.

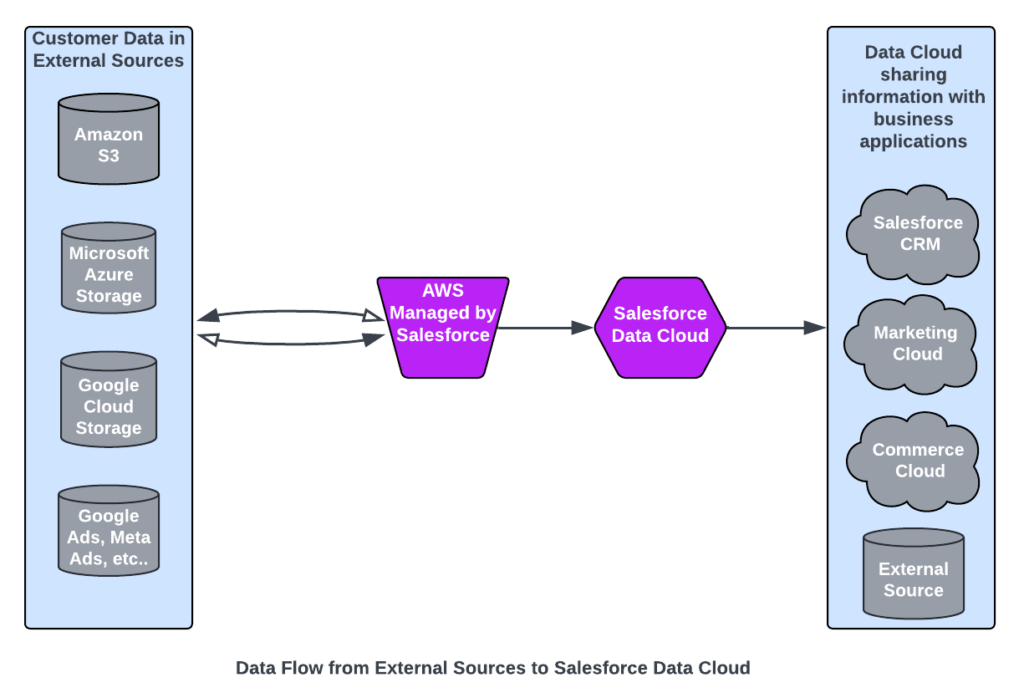

Additionally, Hyperforce offers multi-cloud flexibility by enabling Salesforce Data Cloud to run on other major cloud providers, such as Microsoft Azure and Google Cloud. This approach ensures compliance with regional data residency requirements and offers greater deployment flexibility for global enterprises.

Out-of-the-Ordinary Data Storage

Unlike the usual suspects like Sales Cloud and Service Cloud, Salesforce Data Cloud plays by its own rules with a completely different architecture and tech stack. Instead of the traditional setup, it uses a modern “Data Lakehouse” architecture, where all data lives in S3 buckets using the Apache Parquet file format.

When it comes to storage, Data Cloud uses a smart mix of services to keep things fast and scalable. DynamoDB handles hot storage, delivering quick data retrieval, while Amazon S3 takes care of cold storage for long-term data needs. There’s also a SQL metadata store in the mix to keep metadata well-organized. This setup isn’t just about storing tons of data; it’s about doing it at petabyte scale, bypassing the usual performance and scalability hurdles of traditional relational databases.
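To picture the hot and cold split, here is a small Python sketch. It is a toy model only: `TieredStore`, `hot_capacity`, and the in-memory dictionaries are hypothetical stand-ins for DynamoDB and S3, and say nothing about how Data Cloud is actually implemented.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store: a small, fast 'hot' tier in front of a large 'cold' tier."""

    def __init__(self, hot_capacity: int = 2):
        self.hot_capacity = hot_capacity  # hypothetical size limit for the hot tier
        self.hot = OrderedDict()          # stand-in for hot storage (e.g. DynamoDB)
        self.cold = {}                    # stand-in for cold storage (e.g. S3)

    def put(self, key, record):
        # Every record is persisted in the cold tier and also placed in the hot tier.
        self.cold[key] = record
        self._promote(key, record)

    def get(self, key):
        """Return (record, tier) so the caller can see which tier served the read."""
        if key in self.hot:
            self.hot.move_to_end(key)     # mark as most recently used
            return self.hot[key], "hot"
        record = self.cold[key]           # raises KeyError if the key is unknown
        self._promote(key, record)
        return record, "cold"

    def _promote(self, key, record):
        self.hot[key] = record
        self.hot.move_to_end(key)
        while len(self.hot) > self.hot_capacity:
            self.hot.popitem(last=False)  # evict the least recently used entry


store = TieredStore(hot_capacity=2)
for customer_id in ("c1", "c2", "c3"):
    store.put(customer_id, {"id": customer_id})

first_read = store.get("c1")   # c1 was evicted from the hot tier, so cold serves it
second_read = store.get("c1")  # the first read promoted c1, so hot serves it now
```

The point of the sketch is the read path: recently used records come back from the small fast tier, while everything else is still safely available from the large slow one.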

To make Salesforce data management even smoother, Data Cloud uses Apache Iceberg as an abstraction layer. Think of it as a bridge that connects raw data files with organized table structures, allowing seamless integration with advanced data processing frameworks.
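If the "bridge" idea feels abstract, the following Python sketch shows the general shape of what a table format does: it keeps metadata that maps a table name and version to the raw files behind it. This is a heavily simplified illustration, not the Apache Iceberg API; the `Table` and `Snapshot` classes and the `s3://example-bucket/...` paths are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    snapshot_id: int
    data_files: list  # paths of the Parquet files that make up this table version


@dataclass
class Table:
    name: str
    schema: dict  # column name -> type
    snapshots: list = field(default_factory=list)

    def commit(self, added_files):
        """Create a new snapshot that adds files on top of the current one."""
        current = self.snapshots[-1].data_files if self.snapshots else []
        snapshot = Snapshot(len(self.snapshots) + 1, current + list(added_files))
        self.snapshots.append(snapshot)
        return snapshot

    def files_to_scan(self, snapshot_id=None):
        """Resolve a table version to the raw files a query engine would read."""
        if snapshot_id is None:
            return self.snapshots[-1].data_files
        return next(s.data_files for s in self.snapshots if s.snapshot_id == snapshot_id)


orders = Table("orders", {"order_id": "string", "amount": "double"})
orders.commit(["s3://example-bucket/orders/part-0001.parquet"])
orders.commit(["s3://example-bucket/orders/part-0002.parquet"])

latest_files = orders.files_to_scan()                      # both files
first_version_files = orders.files_to_scan(snapshot_id=1)  # only the first file
```

A processing framework only needs to ask the table which files to read; it never has to know how the files are laid out in the bucket.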

Now that the foundation and the storage are set, let us understand the rest of the Salesforce Data Cloud architecture.

A Different Take on the Models

Let us understand how Data Cloud approaches its data models with a cute little story about a village named Data Cloudville.

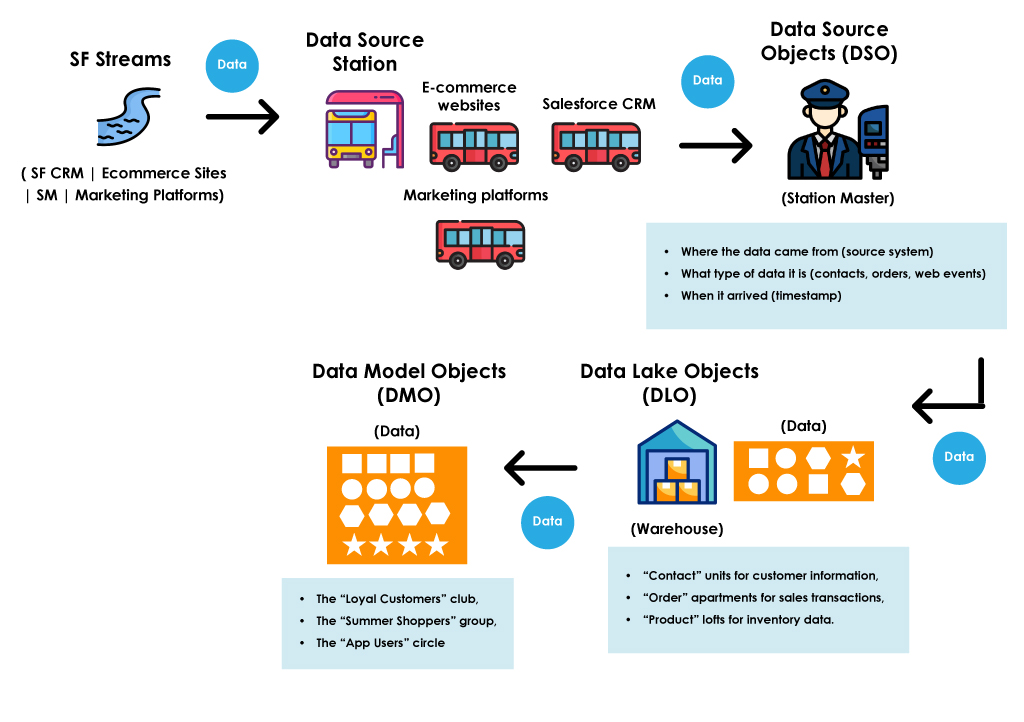

Let’s take a stroll through the neighborhood and meet the residents, each with a unique role in this vibrant community. Our journey starts at the Salesforce Streams River, the lifeline of Data Cloudville. This river constantly carries fresh streams of data from all over: Salesforce CRM, e-commerce sites, social media, marketing platforms, IoT devices, and more.

Unlike old-school data collection methods, where information arrived in batches, Salesforce Streams keeps the water (data) flowing in real time. That means businesses always have the most up-to-date information about their customers.

Now, let’s follow the data as it makes its way through the village.

The first stop is the Data Source Station, where buses filled with data arrive daily. These buses come from all over: Salesforce CRM, e-commerce websites, marketing platforms, and more.

At the station, the Data Source Object (DSO) acts as the station manager, keeping track of every bus and its passengers in the Salesforce org. It logs:

- Where the data came from (source system)

- What type of data it is (contacts, orders, web events)

- When it arrived (timestamp)

However, the DSO doesn’t unpack the luggage. It just records who’s here, waiting for the next step.
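In code terms, the station manager's logbook could look something like the Python sketch below. The `ArrivalLogEntry` class and its field names are invented for illustration and are not Data Cloud's actual schema; they simply mirror the three things listed above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ArrivalLogEntry:
    """One logbook line: what arrived, from where, and when (no payload)."""
    source_system: str    # where the data came from
    data_type: str        # what type of data it is
    arrived_at: datetime  # when it arrived


def log_arrival(source_system: str, data_type: str) -> ArrivalLogEntry:
    # Only metadata is recorded here; the "luggage" (the records) is not unpacked.
    return ArrivalLogEntry(source_system, data_type, datetime.now(timezone.utc))


entry = log_arrival("Salesforce CRM", "contacts")
```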

From the bus station, data moves to a huge warehouse called the Data Lake Object (DLO). Unlike the station manager, the DLO stores the actual data—every record, every detail—just as it arrived.

Inside the warehouse, data can stay as-is, whether it’s a neatly packed customer profile or a messy web clickstream. The DLO is perfect for storing raw data before it’s cleaned up or organized.

It’s a bit chaotic in here, but that’s okay—the DLO is all about holding data until it’s ready for processing.

After their stay in the warehouse, some data gets an upgrade to the tidy and well-organized Data Model Complex, home of the Data Model Objects (DMOs).

To move into this neighborhood, data needs to get cleaned up, structured, and mapped into specific formats. It must fit into predefined apartments like:

- “Contact” units for customer information,

- “Order” apartments for sales transactions,

- “Product” lofts for inventory data.

Here, data finds a permanent residence, ready to contribute to insights and business processes.
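The move from the warehouse to the apartments is essentially a mapping step. Here is a minimal Python sketch of the idea, assuming two sources that name the same contact fields differently. The field names, the `FIELD_MAPPING` table, and the clean-up rules are all hypothetical; in Data Cloud itself, mapping is configured in the product rather than hand-coded like this.

```python
# Raw records as they might sit in the data lake: same meaning, different shapes.
RAW_DLO_RECORDS = [
    {"FirstName": " Ada ", "LastName": "Lovelace", "Email": "ADA@EXAMPLE.COM"},
    {"first": "Alan", "last": "Turing", "email_address": "alan@example.com", "utm": "x1"},
]

# Hypothetical mapping: source field name -> attribute of the "Contact" model.
FIELD_MAPPING = {
    "FirstName": "first_name", "first": "first_name",
    "LastName": "last_name", "last": "last_name",
    "Email": "email", "email_address": "email",
}


def to_contact(raw: dict) -> dict:
    """Clean a raw record and fit it into the predefined Contact shape."""
    contact = {}
    for source_field, value in raw.items():
        target = FIELD_MAPPING.get(source_field)
        if target is None:
            continue  # unmapped fields stay behind in the raw layer
        contact[target] = value.strip()
    if "email" in contact:
        contact["email"] = contact["email"].lower()
    return contact


contacts = [to_contact(record) for record in RAW_DLO_RECORDS]
```

After the mapping, both records share one shape, which is what lets later steps treat contacts from different sources the same way.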

Every community has its clubs and groups. In Data Cloudville, these are represented by the Segment Object.

Data with shared interests or behaviors join different clubs:

- The “Loyal Customers” club,

- The “Summer Shoppers” group,

- The “App Users” circle.

These clubs are dynamic: data can join or leave as its behavior changes. The Segment Object keeps track of who’s in each club, ensuring businesses can engage the right audience.
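One way to think about dynamic club membership is as rules that are re-evaluated against current profiles. The Python sketch below illustrates that idea only; the rule thresholds, the profile fields, and `evaluate_segments` are assumptions made up for the example, not Data Cloud's segmentation engine.

```python
# Hypothetical segment rules: each club is just a condition on a profile.
SEGMENT_RULES = {
    "Loyal Customers": lambda profile: profile["orders_last_year"] >= 5,
    "App Users": lambda profile: profile["uses_app"],
}


def evaluate_segments(profiles):
    """Recompute who belongs to each segment from the current profile data."""
    return {
        name: {p["id"] for p in profiles if rule(p)}
        for name, rule in SEGMENT_RULES.items()
    }


profiles = [
    {"id": "c1", "orders_last_year": 6, "uses_app": False},
    {"id": "c2", "orders_last_year": 1, "uses_app": True},
]

before = evaluate_segments(profiles)

# c2 starts ordering more often, so the next evaluation moves them into the club.
profiles[1]["orders_last_year"] = 7
after = evaluate_segments(profiles)
```

Because membership is derived from behavior rather than stored as a fixed list, nobody has to move `c2` by hand; the next evaluation picks up the change.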

With smart insights and segmented groups, it’s time to take action! The Activation Target Object is the delivery service of Data Cloudville, carrying important messages beyond the town’s borders.

Whether it’s sending a customer segment to a marketing platform or sharing insights with a sales team, the Activation Target Object ensures data reaches its final destination, ready to make an impact.

The “Zero-Copy” Approach

The zero-copy approach in the Salesforce Data Cloud architecture allows businesses to access and analyze data directly from their existing data lakes or data warehouses, such as Snowflake or Amazon Redshift, without needing to physically copy or move the data into Salesforce. Instead of duplicating data, this method establishes a secure connection to the external data source, enabling real-time data integration and analysis.

The zero-copy approach reduces data redundancy, improves efficiency, and accelerates insights by eliminating the need for time-consuming ETL (Extract, Transform, Load) processes, keeping data in its original source while leveraging Salesforce’s analytics and activation capabilities.

It ensures faster time-to-insight while maintaining data governance and minimizing storage costs.
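The "query it where it lives" idea can be demonstrated on a small scale with Python's built-in `sqlite3` module. In this sketch a separate SQLite file plays the role of the external warehouse and an in-memory database plays the role of the platform; both are stand-ins chosen for the example, and the table names are made up. It shows the principle only, not how Data Cloud connects to Snowflake or Redshift.

```python
import os
import sqlite3
import tempfile

# Stand-in for an external warehouse: a separate SQLite database file.
workdir = tempfile.mkdtemp()
warehouse_path = os.path.join(workdir, "warehouse.db")

warehouse = sqlite3.connect(warehouse_path)
warehouse.execute("CREATE TABLE orders (customer_id TEXT, amount REAL)")
warehouse.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("c1", 40.0), ("c1", 60.0), ("c2", 25.0)],
)
warehouse.commit()

# Stand-in for the platform: its own database with its own tables.
platform = sqlite3.connect(":memory:")
platform.execute("CREATE TABLE profiles (customer_id TEXT, name TEXT)")
platform.executemany(
    "INSERT INTO profiles VALUES (?, ?)", [("c1", "Ada"), ("c2", "Alan")]
)
platform.commit()

# Zero-copy style access: attach the external source and query it in place.
# No rows from `orders` are copied into the platform database.
platform.execute("ATTACH DATABASE ? AS ext", (warehouse_path,))
spend_by_customer = platform.execute(
    """
    SELECT p.name, SUM(o.amount)
    FROM profiles AS p
    JOIN ext.orders AS o ON o.customer_id = p.customer_id
    GROUP BY p.name
    ORDER BY p.name
    """
).fetchall()

# A change made in the warehouse is visible on the next query, with no reload step.
warehouse.execute("INSERT INTO orders VALUES ('c2', 75.0)")
warehouse.commit()
alan_total = platform.execute(
    "SELECT SUM(amount) FROM ext.orders WHERE customer_id = 'c2'"
).fetchone()[0]

platform.close()
warehouse.close()
```

Contrast this with an ETL pipeline, where the new order would only show up after the next extract-and-load run.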

Wrap-up

Salesforce Data Cloud architecture empowers organizations to unify, analyze, and activate customer data in real time, driving hyper-personalized experiences and better business outcomes. By leveraging its scalable infrastructure, AI-driven insights, and seamless integrations, businesses can stay ahead in the competitive landscape.

If you’re looking to harness the power of Salesforce Data Cloud for your business, our team can help you navigate its architecture and implementation. Contact us today!

FAQ:

1. What makes Salesforce Data Cloud different from other Salesforce Clouds?

2. How does the Data Streams feature help businesses?

3. What is the role of Zero-Copy Architecture in Data Cloud?

4. How does Salesforce Data Cloud ensure data security and compliance?

5. Can Data Cloud integrate with other Salesforce products?

Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM and Trust to craft impactful marketing strategies. He brings 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development and Business Innovation.