What is a Large Action Model (LAM)?

Put simply, a Large Action Model is a generative AI model that can not only understand and interpret what a human says and wants, but can also take a relevant action in response. Think of it as an extension of an LLM: it understands the intention behind a human prompt and then carries out an executable action to fulfil it.

Let us understand it with an example and a comparison. Large language models can easily write a piece of content like a reply to an escalation email based on a human prompt, but a LAM can automate actions like generating a report, sending emails, or even controlling robotic systems based on the given input. These models aim to bridge the gap between AI understanding and tangible real-world applications.

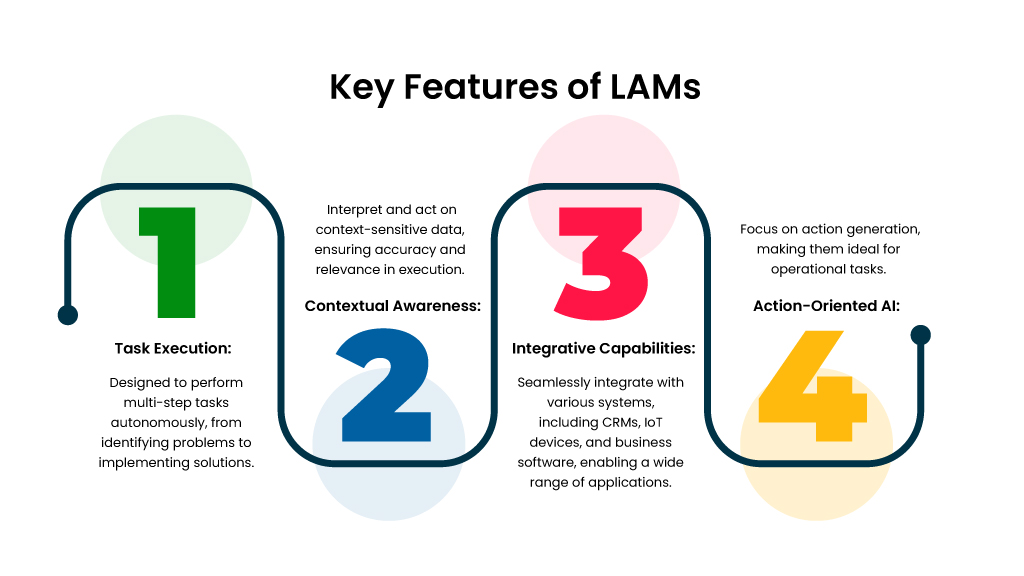

In short, LAMs are designed to perform multi-step tasks autonomously, from identifying problems to implementing solutions.
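
To make the contrast with a text-only model concrete, here is a minimal Python sketch. It assumes a hypothetical keyword-based `interpret` function standing in for the model's intent understanding, and a hypothetical `ACTIONS` registry standing in for real email or reporting integrations. None of these names come from an actual LAM product; the point is only that the prompt ends in executed steps rather than generated text.

```python
from typing import Callable, Dict, List

# Hypothetical action handlers. A real system would call an email or
# reporting API here; this sketch only records what was done.
def send_email(log: List[str]) -> None:
    log.append("email sent")

def generate_report(log: List[str]) -> None:
    log.append("report generated")

# Assumed registry mapping an intent keyword to an executable action.
ACTIONS: Dict[str, Callable[[List[str]], None]] = {
    "email": send_email,
    "report": generate_report,
}

def interpret(prompt: str) -> List[str]:
    """Toy intent detection: known action names in order of appearance."""
    words = prompt.lower().replace(",", " ").split()
    return [word for word in words if word in ACTIONS]

def act(prompt: str) -> List[str]:
    """Interpret the prompt, then execute each detected action in turn."""
    log: List[str] = []
    for name in interpret(prompt):
        ACTIONS[name](log)
    return log

print(act("Generate the weekly report, then email it to the team"))
```

A production LAM would replace the keyword match with a learned model, but the shape stays the same: understand first, then dispatch to something that acts.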

How Large Action Models Work: Unraveling the Different Layers

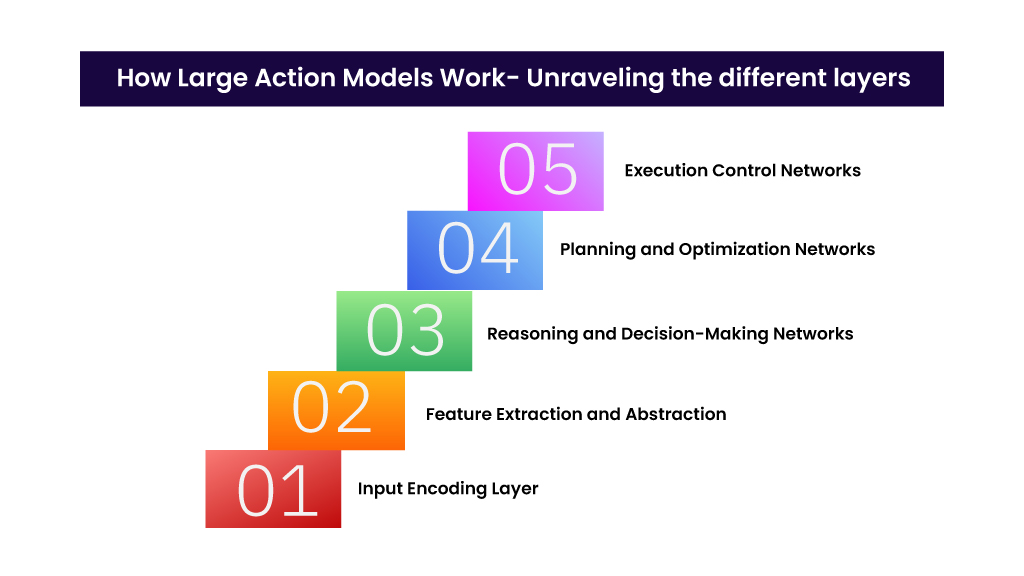

Neural networks are the backbone of Large Action Models (LAMs), providing the computational framework that enables these models to process complex data, reason effectively, and execute sophisticated actions. These networks are typically deep and multi-layered, tailored to the diverse requirements of LAMs. Here’s how neural networks fit into the structure of LAMs:

1. Input Encoding Layer:

Neural networks in LAMs start with an input encoding layer that translates raw data into numerical representations (embeddings). For example:

- Text data is processed using word embeddings or transformers like BERT or GPT.

- Image data is processed using convolutional neural networks (CNNs) for feature extraction.

- Numerical or tabular data uses dense layers to capture patterns and relationships.

This encoding ensures diverse inputs can be uniformly processed within the LAM.
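
As a rough illustration of what "uniform processing" means, the sketch below turns a text instruction and a tabular row into a single numeric vector. It is deliberately simplified: a hashing-trick bag of words stands in for learned embeddings such as BERT, and min-max scaling stands in for dense layers. The dimension `DIM`, the function names, and the column ranges are all assumptions made for this example.

```python
import hashlib
from typing import Dict, List, Tuple

DIM = 8  # assumed size of the text vector in this sketch

def encode_text(text: str, dim: int = DIM) -> List[float]:
    """Hashing-trick bag of words: each token increments one bucket."""
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dim] += 1.0
    return vec

def encode_tabular(row: Dict[str, float],
                   ranges: Dict[str, Tuple[float, float]]) -> List[float]:
    """Min-max scale each numeric column to [0, 1], in sorted column order."""
    scaled = []
    for column in sorted(ranges):
        low, high = ranges[column]
        scaled.append((row[column] - low) / (high - low))
    return scaled

def encode(text: str, row: Dict[str, float],
           ranges: Dict[str, Tuple[float, float]]) -> List[float]:
    """Concatenate both encodings into one fixed-length input vector."""
    return encode_text(text) + encode_tabular(row, ranges)

ranges = {"stock": (0, 10), "price": (0, 20)}
vector = encode("restock shelf seven", {"stock": 5, "price": 10}, ranges)
print(len(vector), vector)
```

Whatever the modality, the downstream layers only ever see a list of numbers of a known length, which is what lets one model handle mixed inputs.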

2. Feature Extraction and Abstraction:

Deep neural networks (DNNs) within the perception layer identify patterns and abstractions from the encoded data. Specialized architectures include:

- Recurrent Neural Networks (RNNs) or Transformers for temporal and sequential data, capturing relationships across time or sequence.

- Attention Mechanisms to focus on the most relevant features in large datasets, improving efficiency and precision.

This stage converts raw inputs into meaningful, high-level features that LAMs can analyze and act upon.
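
The attention mechanism mentioned above can be sketched in a few lines. This is standard scaled dot-product attention for a single query, written in plain Python for readability rather than speed; the example vectors are made up for illustration.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def attention(query: Vector, keys: List[Vector],
              values: List[Vector]) -> Tuple[Vector, Vector]:
    """Return (weights, context) for one query over a set of key/value pairs."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * value[i] for w, value in zip(weights, values))
               for i in range(dim)]
    return weights, context

# The query matches the first key more closely, so the first value dominates.
weights, context = attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, context)
```

The weights always sum to 1, so the output is a blend of the values that leans toward whichever inputs are most relevant to the query.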

3. Reasoning and Decision-Making Networks:

The reasoning layer relies on neural networks, such as:

- Reinforcement Learning (RL) Agents: Deep Q-Networks (DQN) or policy gradient methods enable decision-making by learning optimal actions through trial and feedback.

- Graph Neural Networks (GNNs): These are used for relational data, helping LAMs understand and reason about networks and dependencies.

These networks simulate human-like cognitive abilities, allowing LAMs to evaluate multiple options and select the most suitable action plan.
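
To show what "learning optimal actions through trial and feedback" looks like in code, the sketch below uses plain tabular Q-learning. This is a much simpler relative of the Deep Q-Networks named above: a lookup table replaces the neural network. The four-state corridor, the reward of 1 at the goal, and the constants `ALPHA` and `GAMMA` are all assumptions for this example. To keep the run reproducible, it tries every state-action pair in turn instead of sampling random episodes.

```python
from typing import Dict, List, Tuple

N_STATES = 4              # states 0..3; state 3 is the goal
ACTIONS = (0, 1)          # 0 = move left, 1 = move right
ALPHA, GAMMA = 0.5, 0.9   # assumed learning rate and discount factor

def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic corridor: reaching the last state pays 1 and ends."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(sweeps: int = 200) -> Dict[Tuple[int, int], float]:
    """Apply the Q-learning update to every (state, action) pair per sweep."""
    q = {(s, a): 0.0 for s in range(N_STATES - 1) for a in ACTIONS}
    for _ in range(sweeps):
        for s in range(N_STATES - 1):
            for a in ACTIONS:
                nxt, reward, done = step(s, a)
                best_next = 0.0 if done else max(q[(nxt, b)] for b in ACTIONS)
                q[(s, a)] += ALPHA * (reward + GAMMA * best_next - q[(s, a)])
    return q

def greedy_policy(q: Dict[Tuple[int, int], float]) -> List[int]:
    """Pick the highest-valued action in each non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]

q_table = train()
print(greedy_policy(q_table))
```

After training, the table values fall off with distance from the goal, so the greedy choice in every state is to move toward it. A DQN learns the same kind of values, but approximates the table with a network so it can cope with far larger state spaces.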

4. Planning and Optimization Networks:

Neural networks assist in breaking down complex goals into executable tasks:

- Generative Adversarial Networks (GANs) or Transformers can simulate and predict outcomes, aiding in scenario planning.

- Recurrent and Feedforward Networks create iterative action plans, optimizing for time, cost, or resource efficiency.
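
Breaking a goal into executable tasks is, at its simplest, a dependency-ordering problem. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later) rather than a neural planner, so it illustrates the output of planning, not how a LAM learns to plan. The task names for a "send the monthly report" goal are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical sub-tasks for the goal "send the monthly report".
# Each task maps to the set of tasks that must finish before it starts.
dependencies: Dict[str, Set[str]] = {
    "fetch_data": set(),
    "clean_data": {"fetch_data"},
    "build_charts": {"clean_data"},
    "write_summary": {"clean_data"},
    "send_email": {"build_charts", "write_summary"},
}

def plan(deps: Dict[str, Set[str]]) -> List[str]:
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())

order = plan(dependencies)
print(order)
```

`build_charts` and `write_summary` do not depend on each other, so either may come first. A planner optimizing for time could run them in parallel.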

5. Execution Control Networks:

Execution involves real-time responsiveness, often powered by:

- Recurrent Neural Networks (RNNs) or LSTMs for maintaining state-awareness during multi-step tasks.

- Specialized control systems that integrate with APIs, IoT devices, or robotic systems for action execution.

For example, in a warehouse setting, a LAM integrated with robotic systems can identify inventory shortages, generate a procurement order, and navigate autonomous robots to restock items.
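
The warehouse scenario can be sketched as a small state-tracking executor. Everything here is an assumption made for illustration: the `REORDER_LEVEL` and `TARGET_LEVEL` thresholds, the `ExecutionState` record, and the `dispatch_robot` log entries, which stand in for real robot or API calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List

REORDER_LEVEL = 10  # assumed: below this quantity an item counts as short
TARGET_LEVEL = 50   # assumed: quantity to restock up to

@dataclass
class ExecutionState:
    """Tracks which steps have run so a multi-step task can be audited."""
    completed: List[str] = field(default_factory=list)
    order: Dict[str, int] = field(default_factory=dict)

def find_shortages(inventory: Dict[str, int]) -> Dict[str, int]:
    return {item: qty for item, qty in inventory.items() if qty < REORDER_LEVEL}

def run(inventory: Dict[str, int]) -> ExecutionState:
    state = ExecutionState()

    # Step 1: identify inventory shortages.
    shortages = find_shortages(inventory)
    state.completed.append("identify_shortages")
    if not shortages:
        return state

    # Step 2: generate a procurement order for the missing quantities.
    state.order = {item: TARGET_LEVEL - qty for item, qty in shortages.items()}
    state.completed.append("generate_procurement_order")

    # Step 3: stand-in for sending a robot to restock each item.
    for item in state.order:
        state.completed.append(f"dispatch_robot:{item}")
    return state

result = run({"bolts": 3, "nuts": 40})
print(result.order, result.completed)
```

Keeping an explicit record of completed steps is what lets a system resume or roll back a task that is interrupted halfway.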

Applications of Large Action Models

Large Action Models (LAMs) are versatile AI systems capable of performing complex, multi-step tasks, making them valuable across various industries. Their ability to process diverse data types, reason intelligently, and execute actions autonomously enables them to transform workflows and enhance decision-making. Below are key application areas for LAMs:

1. Autonomous Process Automation

- Industry: Manufacturing, Logistics, Finance

- LAMs automate repetitive and multi-step processes, such as supply chain optimization, predictive maintenance, and invoice processing. They adapt to real-time data and adjust actions to maintain efficiency and accuracy.

2. Customer Interaction and Support

- Industry: Retail, Telecommunications, Healthcare

- LAMs enable hyper-personalized interactions through omnichannel customer support systems, AI-powered chatbots, and automated ticket resolution. They improve response times and customer satisfaction by analyzing user behavior and predicting needs.

3. Strategic Planning and Decision Support

- Industry: Financial Services, Business Consulting

- LAMs assist in portfolio management, risk assessment, and long-term planning. They use predictive models to analyze market trends and recommend actionable strategies for growth and cost reduction.

4. Healthcare and Life Sciences

Applications:

- Drug discovery: LAMs accelerate research by analyzing biological data and simulating drug interactions.

- Patient care: They provide tailored treatment recommendations and manage patient monitoring in real-time.

- Hospital logistics: LAMs optimize resource allocation, such as bed availability and staff scheduling.

5. Cybersecurity and Risk Management

- Industry: IT, Finance, Government

- LAMs monitor systems for potential threats, automate vulnerability patching, and respond to cyberattacks in real-time, ensuring robust security frameworks.

Rabbit Large Action Model: A Revolutionary Approach

The Rabbit r1 is a compact, AI-powered personal assistant device developed by Rabbit Inc. and introduced in January 2024. Designed to streamline daily tasks through voice commands and touch interactions, it runs Rabbit OS, an Android-based system, and uses a Large Action Model (LAM) to interpret and execute user instructions across various applications.

The device executes tasks such as web searches, media playback, and service bookings through voice commands. It integrates with services like Spotify and Uber, enabling actions like playing music or ordering transportation.

Upon release, the Rabbit r1 garnered significant attention, with initial batches selling out rapidly.

However, it faced criticism regarding its functionality and performance, with some reviewers questioning its advantages over smartphones.

In summary, the Rabbit r1 represents an innovative approach to personal AI assistants, offering a unique blend of hardware and software designed to simplify user interactions with technology.

Rabbit LAM stood out due to its ability to:

- Interpret High-Volume Data: Analyze and process large datasets in real-time.

- Enable Precise Automation: Perform tasks with minimal human intervention, enhancing efficiency.

- Adapt to Dynamic Environments: Adjust actions based on changing conditions or input variables.

LAM vs LLM: Key Differences

While LLMs excel at language processing and communication-related tasks, LAMs are action-oriented systems integrated with the real world. The table below lists the key differences between LAMs and LLMs across aspects such as purpose, architecture, and data inputs.

| Aspect | Large Action Models (LAMs) | Large Language Models (LLMs) |
|---|---|---|
| Purpose | Designed to perform multi-step actions and decision-making autonomously. | Focused on generating, interpreting, and understanding natural language. |
| Primary Functionality | Executes tasks, plans workflows, and interacts with real-world systems. | Generates text, answers questions, and summarizes or explains information. |
| Core Architecture | Combines diverse neural network types like reinforcement learning, GNNs, and transformers for action planning. | Primarily transformer-based architectures (e.g., GPT, BERT) optimized for language understanding and generation. |
| Data Inputs | Multi-modal: Handles text, images, real-time sensor data, and structured data. | Primarily text-based, with some models handling multimodal inputs like images and code. |
| Action Execution | Directly interacts with external systems, APIs, or devices to execute tasks. | Produces textual outputs that may guide users but does not inherently execute actions. |
| Integration | Seamlessly integrates with IoT, robotics, and enterprise systems for real-world impact. | Integrates into chatbots, virtual assistants, and text-based systems for language-based interactions. |
| Real-Time Use | Handles real-time scenarios like autonomous systems, resource management, and logistics. | Responds in real-time for conversational use cases but lacks inherent action-oriented capabilities. |
| Applications | Used in robotics, autonomous systems, healthcare workflows, and enterprise automation. | Applied in content creation, customer support, sentiment analysis, and conversational AI. |

LAMs Powering AI Agents

Large Action Models (LAMs) are revolutionizing AI agents by enabling them to go beyond simple responses and perform complex, multi-step tasks autonomously. Unlike traditional AI that focuses on specific outputs like generating text or identifying objects, LAMs empower agents to process diverse data, reason intelligently, and make decisions in real time. They allow AI agents to adapt dynamically to changing environments, plan actions, and interact seamlessly with external systems like IoT devices or APIs. For instance, in logistics, a LAM-powered agent can analyze supply chain data, predict delays, and proactively adjust delivery schedules, all without human intervention. By combining deep learning, reinforcement learning, and multi-modal data processing, LAMs are turning AI agents into versatile, problem-solving tools capable of driving efficiency and innovation across industries.
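
The logistics example follows an observe, predict, act loop, which can be sketched as below. The `Shipment` fields and the distance-over-speed estimate are assumptions chosen to keep the example small; a real agent would use a learned delay model and call a scheduling API instead of editing a field.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Shipment:
    shipment_id: str
    distance_km: float
    avg_speed_kmh: float
    promised_hours: float

def predict_hours(shipment: Shipment) -> float:
    """Toy delay model: travel time from distance and average speed."""
    return shipment.distance_km / shipment.avg_speed_kmh

def adjust_schedule(shipments: List[Shipment]) -> List[str]:
    """Reschedule any shipment predicted to miss its promised time."""
    actions = []
    for shipment in shipments:
        eta = predict_hours(shipment)                 # observe and predict
        if eta > shipment.promised_hours:             # decide
            shipment.promised_hours = eta             # act (stand-in for an API call)
            actions.append(f"reschedule {shipment.shipment_id} to {eta:.1f}h")
    return actions

fleet = [
    Shipment("A", distance_km=300, avg_speed_kmh=60, promised_hours=6),
    Shipment("B", distance_km=400, avg_speed_kmh=50, promised_hours=6),
]
print(adjust_schedule(fleet))
```

Shipment A is on time and left alone, while shipment B is predicted late and rescheduled without anyone having to ask.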

Wrap-up

Large Action Models represent the next frontier in AI innovation. By moving beyond mere understanding and into actionable intelligence, LAMs have the potential to transform industries and redefine how we interact with technology. Whether it’s the Rabbit Large Action Model or other LAM frameworks, these models are paving the way for a future where AI doesn’t just assist—it acts. As generative AI and LAMs converge, the possibilities are limitless, making now the perfect time to explore their applications and potential for your business.

Want to know more about how AI agents can empower your business? Contact our experts.


Nilamani Das

Nilamani is a thought leader who champions the integration of AI, Data, CRM and Trust to craft impactful marketing strategies. He brings 25+ years of experience in the technology industry, with expertise in Go-to-Market Strategy, Marketing, Digital Transformation, Vision Development and Business Innovation.